Originally posted here.

Over the last month, we have put out several Toolkit releases. The primary focus of the releases has been firming up and improving spark-submit support.

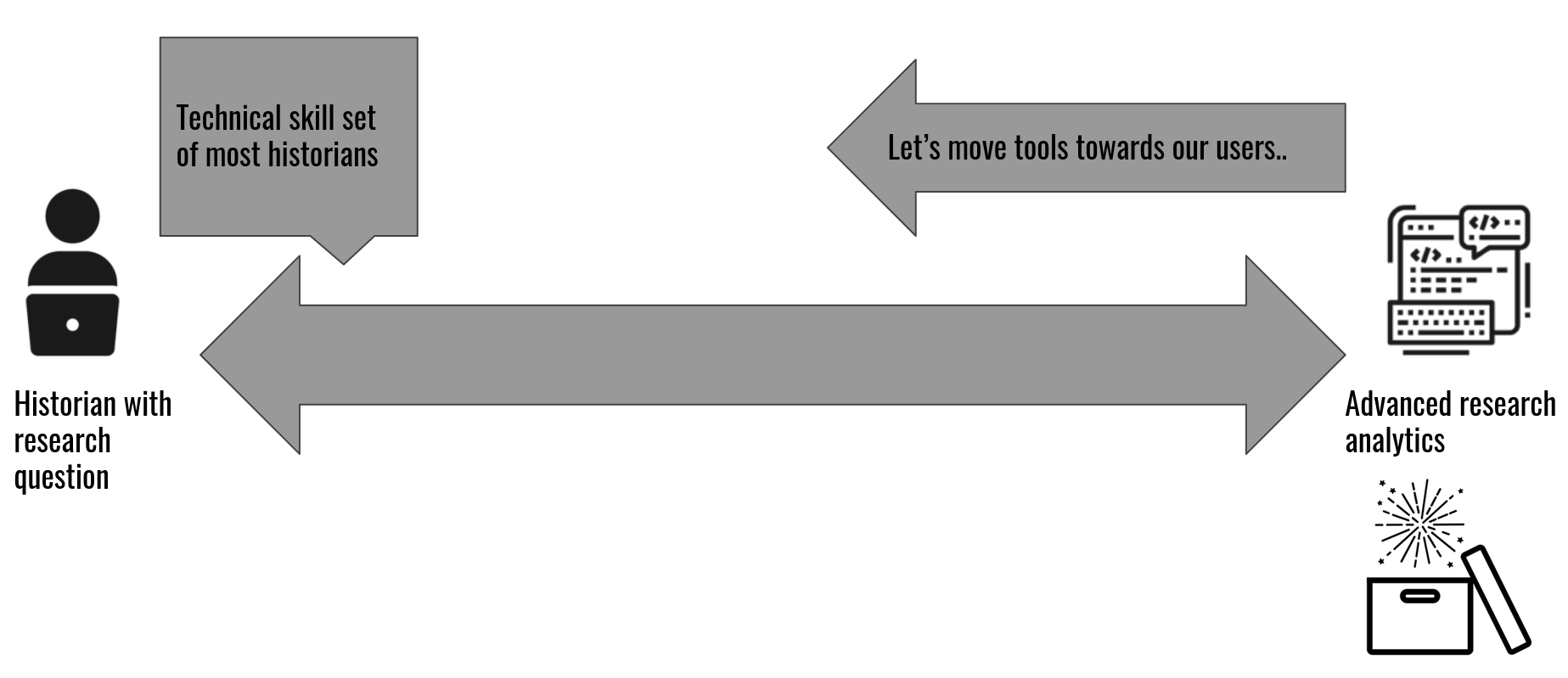

What does this mean? The short answer is that it makes the Toolkit easier to use. Think of the “Let’s move tools towards our users” graphic from my “Cloud-hosted web archive data: The winding path to web archive collections as data” post from a few weeks back. Basically, a user can extract a given type of information from a web archive collection with a single command.

The long answer is that we now have a set of fourteen “extractors” that will produce a variety of derivatives or finding aids (depending on your perspective) for a given web archive collection. Each of these extractors can be run with a single command, and none of them requires copying and pasting Scala scripts into spark-shell or passing Scala files to spark-shell.

A comparison makes the relative simplicity of spark-submit clear. Let’s say that we want to extract the “Domain Graph” from a web archive collection. Previously we’d have to copy and paste the following into spark-shell:

    import io.archivesunleashed._
    import io.archivesunleashed.df._
    import io.archivesunleashed.app._

    val webgraph = RecordLoader.loadArchives("/path/to/web/archives", sc).webgraph()

    val graph = webgraph.groupBy(
        $"`crawl_date`",
        RemovePrefixWWWDF(ExtractDomainDF($"src")).as("src_domain"),
        RemovePrefixWWWDF(ExtractDomainDF($"dest")).as("dest_domain"))
      .count()
      .filter(!($"dest_domain"===""))
      .filter(!($"src_domain"===""))
      .filter($"count" > 5)
      .orderBy(desc("count"))

    WriteGraphML(graph.collect(), "/path/to/output/gephi/example.graphml")

Now, we can just use the “Domain Graph” extractor with spark-submit:

    bin/spark-submit \
      --class io.archivesunleashed.app.CommandLineAppRunner \
      path/to/aut-fatjar.jar \
      --extractor DomainGraphExtractor \
      --input /path/to/warcs \
      --output output/path \
      --output-format graphml

Line continuation characters (\) are included here for readability.
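If you run extractors regularly, the invocation above can be wrapped in a small shell function. The following is a minimal sketch and not part of the Toolkit: `run_extractor`, `SPARK_SUBMIT`, and `AUT_JAR` are hypothetical names chosen for illustration, and the function only passes the flags that appear in the command above.

```shell
# Hypothetical wrapper around the spark-submit invocation shown above.
# SPARK_SUBMIT and AUT_JAR are assumed variable names; point them at your own install.
SPARK_SUBMIT="${SPARK_SUBMIT:-bin/spark-submit}"
AUT_JAR="${AUT_JAR:-path/to/aut-fatjar.jar}"

# run_extractor EXTRACTOR INPUT OUTPUT [FORMAT]
# Runs a single extractor. --output-format is only passed when FORMAT is given.
run_extractor() {
  extractor=$1 input=$2 output=$3 format=${4:-}

  # Build the argument list in the positional parameters (POSIX sh has no arrays).
  set -- --class io.archivesunleashed.app.CommandLineAppRunner "$AUT_JAR" \
         --extractor "$extractor" --input "$input" --output "$output"
  if [ -n "$format" ]; then
    set -- "$@" --output-format "$format"
  fi

  "$SPARK_SUBMIT" "$@"
}

# Example call (commented out so that sourcing this file runs nothing):
# run_extractor DomainGraphExtractor /path/to/warcs output/path graphml
```

Setting `SPARK_SUBMIT=echo` before calling the function prints the arguments instead of launching Spark, which is a cheap way to check a command before running it on a large collection.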

This does not mean we are abandoning or pivoting away from spark-shell support. On the contrary, we are still working towards a 1.0.0 release of the Toolkit that will include not only spark-shell and spark-submit support, but also pyspark parity (where everything you can do in Scala can be done in Python)!

Why would I want to use spark-submit over spark-shell? Think of the Toolkit extractors as command line programs that you run on a web archive collection. Using the Toolkit with spark-shell, by contrast, is for users who want to use the Toolkit’s API to ask a question of a web archive collection that is not covered by one of the extractors. For example, you would use an extractor to get the entirety of the plain text. If you wanted something narrower, you would use spark-shell: for example, extracting all of the French-language pages (language filter) of a given GeoCities neighborhood (URL pattern filter) that contain a particular string (content filter), and returning only the text of those pages with boilerplate, HTML, and HTTP headers removed.

So, what extractors are available in the latest (0.70.0) release of the Toolkit? Detailed example usage for each can be found here, and I discussed the Parquet format in more detail in this post.

- Audio information (CSV or Parquet) with the following columns: crawl_date, url, filename, extension, mime_type_web_server, mime_type_tika, md5, and sha1.
- Domain frequency (CSV or Parquet) with the following columns: domain, and count.
- Domain graph (CSV, Parquet, GraphML, or GEXF) columnar output: crawl_date, src_domain, dest_domain, and count.
- Image graph (CSV or Parquet) with the following columns: crawl_date, src, image_url, and alt_text.
- Image information (CSV or Parquet) with the following columns: crawl_date, url, filename, extension, mime_type_web_server, mime_type_tika, width, height, md5, and sha1.
- PDF information (CSV or Parquet) with the following columns: crawl_date, url, filename, extension, mime_type_web_server, mime_type_tika, md5, and sha1.
- Plain text (CSV or Parquet) with a single column, content, which has boilerplate, HTTP headers, and HTML removed.
- Presentation program information (CSV or Parquet) with the following columns: crawl_date, url, filename, extension, mime_type_web_server, mime_type_tika, md5, and sha1.
- Spreadsheet information (CSV or Parquet) with the following columns: crawl_date, url, filename, extension, mime_type_web_server, mime_type_tika, md5, and sha1.
- Text files information (CSV or Parquet) with the following columns: crawl_date, url, filename, extension, mime_type_web_server, mime_type_tika, md5, and sha1.
- Video information (CSV or Parquet) with the following columns: crawl_date, url, filename, extension, mime_type_web_server, mime_type_tika, md5, and sha1.
- Web graph (CSV or Parquet) with the following columns: crawl_date, src, dest, and anchor.
- Web pages (CSV or Parquet) with the following columns: crawl_date, domain (www prefix removed), url, mime_type_web_server, mime_type_tika, and content (HTTP headers and HTML removed).
- Word processor information (CSV or Parquet) with the following columns: crawl_date, url, filename, extension, mime_type_web_server, mime_type_tika, md5, and sha1.
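Because the CSV derivatives are plain columnar files, they can be explored with standard command line tools. As a rough sketch, the function below lists the most frequent domains from a domain frequency CSV. The function name `top_domains` is hypothetical, and the sketch assumes each row is `domain,count` with no commas inside the domain; whether your output includes a header row may vary, so non-numeric counts are skipped.

```shell
# top_domains FILE [N]
# Prints the N rows with the highest counts (default 10) as "count<TAB>domain".
# Assumes two comma-separated fields per row: domain, then count.
top_domains() {
  file=$1 n=${2:-10}

  # Keep rows whose second field is a whole number (this drops a header row
  # if one is present), put the count first, then sort numerically, descending.
  awk -F, '$2 ~ /^[0-9]+$/ { print $2 "\t" $1 }' "$file" \
    | sort -k1,1nr \
    | head -n "$n"
}

# Example call (commented out; the path is a placeholder):
# top_domains /path/to/domain-frequency.csv 20
```

Spark typically writes its output as a directory of part files rather than a single file, so you may need to concatenate those parts into one CSV before passing it to the function.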

If you’re a Toolkit user, we’d love for you to check out this new functionality and send us your feedback. If you’re a Cloud user, we’ve implemented a few of these extractors there, and provide you with the CSV output.