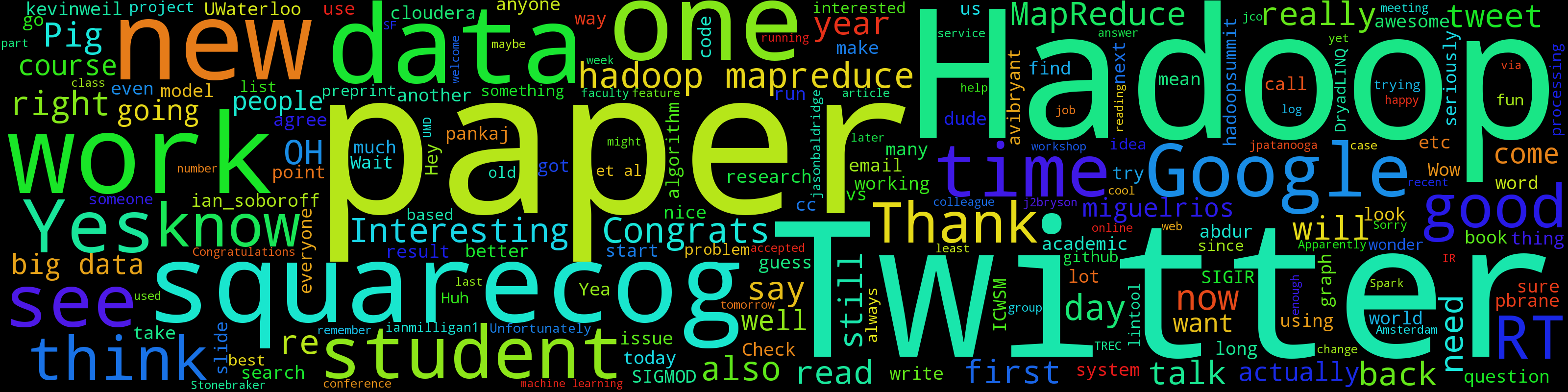

At this past week’s Archives Unleashed datathon, I jokingly created some wordclouds of my Co-PIs’ timelines.

Finished my most likely bigly winning #hackarchives project: A Word Cloud of @lintool's timeline! https://t.co/eK2KPGjaGo

— nick ruest (@ruebot) April 27, 2018

Or, @ianmilligan1 #HackArchives https://t.co/qMxiet0osl

— nick ruest (@ruebot) April 27, 2018

Mat Kelly asked about the process this morning, so here is a little how-to of the pipeline:

Requirements:

- twarc

- jq

- wordcloud_cli.py

- sed

Process:

Use twarc to grab some data.

$ twarc timeline lintool > lintool.jsonl

Extract the tweet text.

$ cat lintool.jsonl | jq -r .full_text > lintool_tweet.txt

Remove all the URLs from the tweets.

$ sed -e 's!http[s]\?://\S*!!g' lintool_tweet.txt > lintool.txt
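The `\?` and `\S` in that expression are GNU sed extensions, so the command may not behave the same with the BSD sed that ships on macOS. As a minimal sketch, assuming a sed that supports `-E` (both GNU and BSD sed do), the same idea can be written with POSIX extended syntax. The function name `strip_urls` is made up for this example and is not part of any of the tools above.

```shell
# Hypothetical helper (not part of sed, twarc, or wordcloud):
# delete every http:// or https:// URL, up to the next whitespace.
# Reads the files given as arguments, or stdin when there are none.
strip_urls() {
  sed -E 's#https?://[^[:space:]]*##g' "$@"
}

# Try it on a sample line before running it over a whole timeline.
printf 'A Word Cloud of a timeline! https://t.co/eK2KPGjaGo\n' | strip_urls
```

With that in place, `strip_urls lintool_tweet.txt > lintool.txt` should do the same job as the sed command above.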

Create a wordcloud.

$ wordcloud_cli.py --text lintool.txt --imagefile lintool.png

Nota bene

- Each of these commands has a whole lot of options. Check them out, and experiment.

- Yes, there is probably a better way to do this, and you could even make it into a one-liner. I pulled this together as a favour to Mat.

- We were initially going to include wordclouds of collections in AUK, but wordcloud_cli.py doesn’t perform well at scale. Scale here means feeding it text files of 5 GB up to 500 GB of raw text. Maybe one day we’ll revisit it.
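For the curious, here is one way the one-liner might look. Treat it as a rough sketch rather than a tested recipe: it assumes twarc is already configured with credentials, it swaps the sed expression for a POSIX extended-syntax equivalent (`-E`) so it is not tied to GNU sed, and the function name `timeline_cloud` is invented for this example.

```shell
# Hypothetical wrapper (not part of twarc or wordcloud): chain the steps
# above for the account name given as the first argument.
timeline_cloud() {
  # Fetch the timeline, keep only the tweet text, and strip URLs in one
  # pass, so that only the cleaned text is written to disk.
  twarc timeline "$1" \
    | jq -r .full_text \
    | sed -E 's#https?://[^[:space:]]*##g' > "$1.txt" \
    && wordcloud_cli.py --text "$1.txt" --imagefile "$1.png"
}
```

Running `timeline_cloud lintool` should leave `lintool.txt` and `lintool.png` in the current directory. The trade-off is that the raw JSON is not kept, so changing any step means fetching the timeline again.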